AI Powers New Thought-To-Text System Without Need For Brain Implants

University of Technology Sydney

Researchers in Australia say they have developed the first system for translating silent thoughts into text without the need for invasive surgery or MRI scans, thanks to artificial intelligence.

The technology could help people who can’t speak due to illness or injury, including stroke or paralysis, according to researchers at the University of Technology Sydney’s GrapheneX-UTS Human-centric Artificial Intelligence Center. It could also enable communication between humans and machines, such as allowing a user to control a bionic arm or robot.

Study participants silently read passages of text while wearing a cap that recorded electrical brain activity through their scalp using an electroencephalogram (EEG). The researchers developed an AI model dubbed DeWave, trained on large quantities of EEG data, that can translate EEG signals into words and sentences.

The study marks a leap forward in such assisted communication technologies, which until now have required either the surgical implantation of electrodes in the brain, as with Elon Musk’s Neuralink, or MRI scanning, which isn’t feasible for day-to-day use.

Previous methods have also relied on additional eye-tracking data to improve accuracy, which the new system doesn’t need.

“This research represents a pioneering effort in translating raw EEG waves directly into language, marking a significant breakthrough in the field,” said Distinguished Professor CT Lin, who led the research alongside first author Yiqun Duan and fellow PhD candidate Jinzhou Zhou from the UTS Faculty of Engineering and IT.

“It is the first to incorporate discrete encoding techniques in the brain-to-text translation process, introducing an innovative approach to neural decoding. The integration with large language models is also opening new frontiers in neuroscience and AI,” Lin said.

The UTS study was conducted with 29 participants, more than previous decoding technologies that were tested on only one or two subjects.

Although the EEG signals collected through the portable cap are noisier than those collected via brain implant or MRI, the DeWave AI surpassed previous translation benchmarks thanks to a more robust set of training data.

“The model is more adept at matching verbs than nouns. However, when it comes to nouns, we saw a tendency towards synonymous pairs rather than precise translations, such as ‘the man’ instead of ‘the author’,” said Duan.

“We think this is because when the brain processes these words, semantically similar words might produce similar brain wave patterns. Despite the challenges, our model yields meaningful results, aligning keywords and forming similar sentence structures,” he said.

The translation accuracy score is currently around 40%, but researchers hope to see this move closer to 90%, comparable to traditional speech recognition or language translation programs.

Their study was selected as a spotlight paper at the NeurIPS conference, to be held Dec. 12 in New Orleans, La.

TMX contributed to this article.

More From Sci + Tech

-

Loud Booming Heard While SpaceX Falcon Heavy Launches Mission for…

-

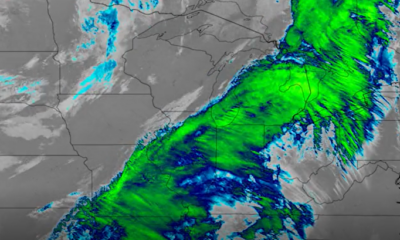

CIRA Satellies: A large low just off of the California…

-

Pasadena, CA: What appears to be a B-2 Bomber flyover…

-

Mexico – Popocatépetl launches plumes of ash into the air…

-

NYC Health Officials Warn Of Spike In Sickness Caused By…

-

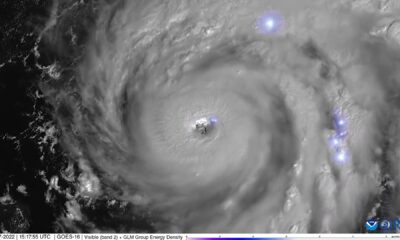

NOAA Satellites show lightning inside Hurricane Ian

-

SpaceX Fined After Rocket Engine Test Puts Worker In A…

-

NASA Celebrates Veterans Day With Tribute Video to Former and…

-

CIRA: Yesterday’s view of wildfires flaring up in Quebec.

-

Satellites Show First Major Cold Front of the Year Set…

-

Bright Meteor Soars Through the Sky in Arkansas

-

Another Angle- NASA Artemis 1 Seen from Clermont, Fl